Steve Neavling

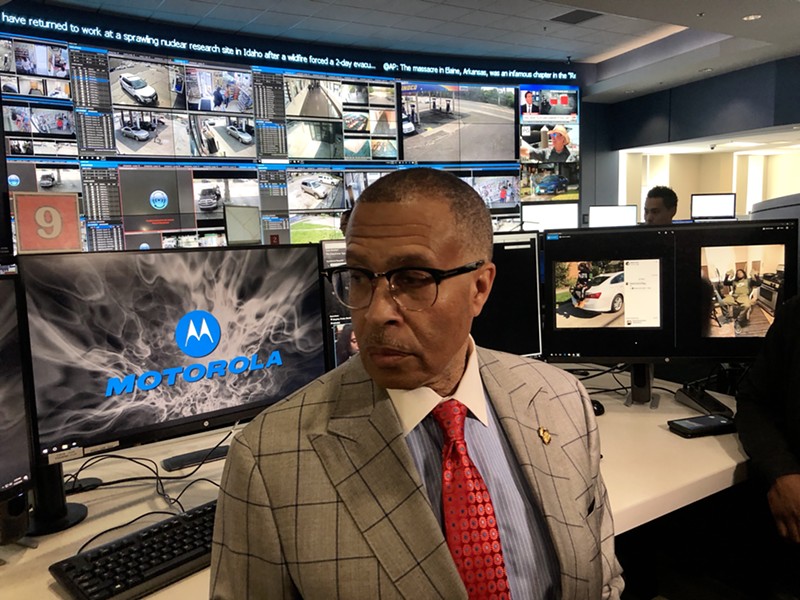

DPD Chief James Craig inside the city's Real Time Crime Center at police headquarters.

Last week, it was reported that Detroit's three-year, $1 million facial recognition technology contract was set to expire that Friday. The surveillance program has come under increasing scrutiny in recent months, after two cases came to light in which the technology led to the arrest of innocent men. Though DPD has said it would ask City Council to extend the program, it has not yet done so.

It turns out that this isn't the end of the controversial program, however.

When asked if DPD had halted its use of the program, a spokesman for Mayor Mike Duggan tells Metro Times that software provider DataWorks agreed last year to extend its service contract through Sept. 30, 2020, giving the city an extra two months of service free of charge.

But beyond that, the contract merely refers to technical support, not the use of the software. So even if City Council opts not to renew the contract, DOIT, the city's IT department, would simply service the DataWorks software itself.

In other words, "The city of Detroit bought and owns the DataWorks software, with the right to operate it in perpetuity," the spokesman says.

Tristan Taylor, a leader of local anti-police brutality movement Detroit Will Breathe, says the fact that the DPD would be using the technology without technical support from DataWorks is "disturbing."

"We know that it's a flawed program from the beginning," Taylor tells Metro Times. "To have that program that's already flawed, and not even have the updates it needs, that's really problematic."

The program has been criticized for showing a racial bias in identifying Black people. A Farmington Hills man named Robert Williams made national headlines last month when he was believed to have been the first person to be incorrectly arrested due to a facial recognition technology error. In January, Williams was arrested at home, in front of his family, accused of stealing merchandise from luxury watchmaker Shinola. The charges were dismissed after the officers admitted the technology appeared to have led them to the wrong guy.

"But the damage is done," Victoria Burton-Harris, who is Williams's attorney and also running in Tuesday's primary election to unseat Wayne County Prosecutor Kym Worthy, wrote in an op-ed for the ACLU. "Robert’s DNA sample, mugshot, and fingerprints — all of which were taken when he arrived at the detention center — are now on file. His arrest is on the record. Robert’s wife, Melissa, was forced to explain to his boss why Robert wouldn’t show up to work the next day. Their daughters can never un-see their father being wrongly arrested and taken away — their first real experience with the police."

It turns out Williams wasn't alone. Last year, Michael Oliver was wrongly accused of a felony for allegedly grabbing a cellphone out of a Detroit teacher's car and throwing it. In that case, the software identified Oliver as a potential lead based on cellphone video of the altercation, and the teacher fingered Oliver in a photo lineup. But again, the charges were dropped when evidence showed he was not the person in the video the software used. (Oliver has tattoos on his arm, while the man in the video did not.)

Cases using facial recognition technology must now be forwarded to Worthy's office. Worthy told the Detroit Free Press that she supported the use of the technology only for investigations, not arrests.

"In the summer of 2019, the Detroit Police Department asked me personally to adopt their Facial Recognition Policy," she said. "I declined and cited studies regarding the unreliability of the software, especially as it relates to people of color." Both Williams and Oliver are Black.

In a recent press conference, Mayor Duggan defended the use of the technology, saying it should only be used for violent crimes and only as a tool to generate leads. According to the city's new policy, a warrant should be obtained only with evidence other than the photo used by the facial recognition software.

Duggan said he was "very angry" about how the Williams case was handled, and apologized to Williams. He said the problem in that instance had to do with "subpar detective work," not the use of the software itself.

Before the Williams case was made public, U.S. Rep. Rashida Tlaib, a vocal critic of the technology, had come under fire for warning Detroit Police Chief James Craig about racial bias in the use of the technology.

"Analysts need to be African-Americans, not people that are not," Tlaib told Craig during a tour of his department's Real Time Crime Center, according to a video published by The Detroit News. "I think non-African-Americans think African-Americans all look the same."

Craig suggested Tlaib's comments were racist.

"It's a software. It's biometrics," Craig told Fox & Friends. "And, to put race in it ... we're talking about trained professionals. My staff goes through intense training with the FBI, and so they're not looking at race, but it's measurements. We were appalled when she made this statement."

In a statement to Metro Times, Tlaib says she urges the Mayor to reconsider the use of the technology.

"To allow Detroit Police to use what has repeatedly been proven to be faulty and unreliable facial recognition technology, especially when used in Black and Brown neighborhoods, is a disservice to our communities. Not only that, it flies in the face of the people of Detroit who have taken to the streets day after day to demand an improvement to how we protect our communities, not a regression such as this decision. I have introduced legislation in the House of Representatives to prohibit its funding and will continue to look for ways to prevent the harm it will inflict by perpetuating the racist targeting of innocent people across our country. In the meantime, I implore the Mayor to reconsider — the multiple wrongful arrests and studies showing that such an occurrence is not an anomaly when it comes to law enforcement use of facial recognition technology should be more than enough to convince any elected, law enforcement, or federal official that this is not the way to go about (actually) keeping people safe."

A number of municipalities have moved to ban facial recognition software. Boston, San Francisco, Oakland, Cambridge, Mass., and Somerville, Mass. have all enacted bans. In Michigan, lawmakers have also proposed bans.

This story was updated with a statement from Rashida Tlaib.
